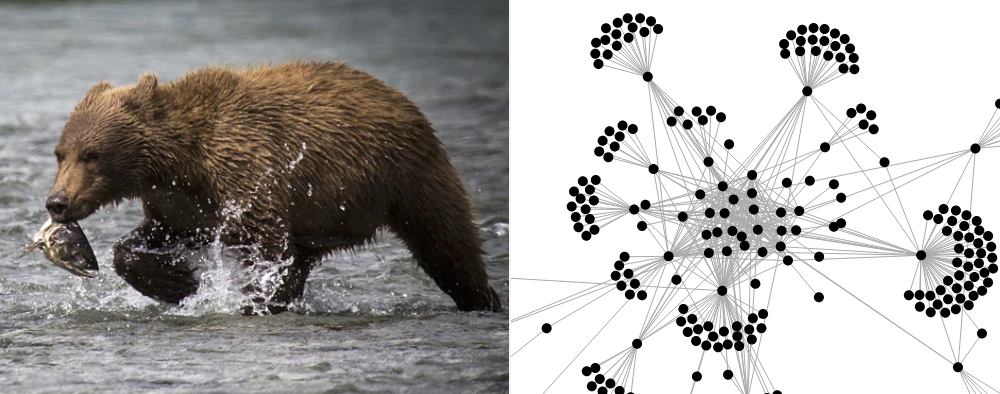

Homework 1: "Bear Crawl" Web Crawler (25 Points)

Chris Tralie

Bear image CC0 creative commons image courtesy of rawpixel.com

Learning Objectives

- Conceptualize a system of links as a graph data structure

- Implement breadth first search in a fun application

- Carefully read documentation about web scraping libraries

Description / Overview

In this assignment, you will create a breadth-first web crawler in python to discover all of the HTML pages on a web site, starting at some base url, up to some number of clicks. For instance, the viewer below shows all HTML pages that are within two clicks of https://www.ursinus.edu. Since we're the Ursinus bears, we may as well call this the "bear crawler" in that case 🐻. But the code will be general enough to work on any site, and students are encouraged to try this on other web sites and share results on the class discord!

This assignment starts us up at the very top of the application level at the top of the network stack. In subsequent assignments, we'll pull ourselves down to lower parts of the application layer and implement parts of the HTTP protocol directly in C, but for now, students will use a higher level implementation of this protocol in python's urllib library so they can focus on how to use the result of HTTP communication in a cool application.

Ethics of Web Crawling

One theme throughout the course is that with great power comes great responsibility. On the very day that I released this assignment (1/23/2025), there was an article on 404 media about how some AI companies are abusing grossly inefficient web crawlers to create their training data, and some creative solutions that web site owners have taken to combat this by creating infinite links on web sites (so-called "spider traps"). Any web crawler that is minimally compliant to a web host's wishes will read and respect the robots.txt file that specifies constraints on what a crawler should do. We won't do this since we're sticking to ursinus.edu, whose robots.txt file simply says that crawlers shouldn't follow any "reply to" links to avoid going into infinitely deep traversals. But we're doing a breadth-first search only up to a certain amount, so we'll never have to worry about this problem. And we're also being reasonably courteous by sleeping in between requests. But be mindful of this if you use your code on this assignment in other contexts, and be a good internet citizen!

Getting Started / What To Submit

Take a moment now to make sure that python is setup on your computer (you may want to look back at the data structures page about this).

There is no starter code for this assignment. Instead, students should create a Jupyter notebook called crawler.ipynb or a python file called crawler.py, which they'll hand in on Canvas at the end of the assignment. In addition to using the standard python library urllib to help with parsing URLs and loading HTML, I'd recommend that you use the Beautiful Soup library to find all of the links on a page. If you're having trouble installing this library on your computer, you can use a Jupyter notebook in Google colab.

Programming Tasks

If we consider a web page as a node in a graph, and we put an edge between two pages if there exists a link from one to the other, then a web crawler is just a glorified graph search algorithm. But the devil is in the details. First, in part 1, we have to do a fair bit of work to clean up the URLs so we don't accidentally mistake two versions of the same URL as two different pages. There is no perfect method of doing this, but we'll make some informed choices make a reasonably clean web crawler.

Then, before creating the crawler in earnest in part 2, you should review the breadth first search notes from data structures. You may also want to review my video on solving 8-puzzle with breadth-first search. You can borrow some of the code from these examples if you'd like, though you shouldn't necessarily need a graph class, since all I want you to do is return a list of edges, each of which is a pair of URLs that are connected by a link. Also, you can python's built-in deque instead of our custom doubly-linked list implementation from that class.

Part 1: Neighbor URL Parsing (7 Points)

Suppose we're at a web page https://www.ursinus.edu/offices/registrar/meet-the-staff and we see a link tag that looks like this:

The href part of the tag gives the link we want to follow to a neighboring page, but it is a relative path, not an absolute path. This is the norm with web sites to make them more portable. But we need to convert it into an absolute URL before we can visit it. Thankfully, the urljoin method of the urllib.parse library gets us most of the way there. For instance:

Will give us the link https://www.ursinus.edu/offices/academic-calendar. However, there is some more work we need to do to prepare our links for a good web crawler.

Your Task

Create a method next_path(base, href) that takes two parameters:

base: The URL of the current pagehref: The value of thehrefproperty of anatag in the current page that links to another page page

Your method should return a cleaned up string corresponding to the absolute URL of the neighboring page. First, do the following to base and url:

-

If

basedoesn't end in a file, add a "/" to it -

If

hrefstarts withwww., addhttp://to the beginning of it

Then, join base and href using urllib.parse.urljoin, and do the following modifications:

-

If the URL ends in

index.*, remove the end of it. For example, this will let us realize that the page https://www.ursinus.edu is the same as https://www.ursinus.edu/index.php -

If there is at least one

#character, remove the first#and everything following it. This is so that a page with an "anchor" isn't counted as a unique page (this is a subjective choice for a web crawler, but we'll stick with it in this assignment to cut down on the number of unique pages) -

Finally, if the URL ends in a

/, remove the/. For instance, we want to recognize that https://www.ursinus.edu and https://www.ursinus.edu/ are the same page.

Tests

If this is working properly, you should get the following outputs from your next_path method:

Input |

Output |

next_path("https://www.ursinus.edu", "/")

|

https://www.ursinus.edu |

next_path("https://www.ursinus.edu/offices/registrar", "../")

|

https://www.ursinus.edu/offices |

next_path("https://www.ursinus.edu/offices", "registrar/meet-the-staff/")

|

https://www.ursinus.edu/offices/registrar/meet-the-staff |

next_path("https://www.ursinus.edu/academics", "www.yahoo.com/CoolStuff/index.php")

|

http://www.yahoo.com/CoolStuff |

next_path("https://www.ursinus.edu/offices/marketing-and-communications/web-accessibility-statement", "#main-content")

|

https://www.ursinus.edu/offices/marketing-and-communications/web-accessibility-statement |

next_path("https://www.ursinus.edu", "live/files/5133-web-academiccalendar2024-25.pdf")

|

https://www.ursinus.edu/live/files/5133-web-academiccalendar2024-25.pdf |

next_path("https://www.ctralie.com/Teaching/Tutorials.html", "PoissonImageEditing")

|

https://www.ctralie.com/Teaching/PoissonImageEditing |

Part 2: BFS Crawler (18 Points)

Now we're ready to make the actual web crawler

Your Task

Create a method crawl_page(base_url, max_clicks, filename). The crawling should start at base_url and visit every page that can be accessed on that domain from base_url within max_clicks clicks by following a sequence of href values in <a> tags. You can use urllib.request.urlopen to open each URL and check its Content-Type, and then read()its data if its's the correct type. You can then use BeautifulSoup to find all of the <a> with href fields in this document.

Crucially, you should not visit pages that are on a different domain from base_url. So, for example, if my base_url is https://www.ursinus.edu and I see a link to https://www.ctralie.com/Teaching, you should not visit that link.

As you're crawling the website, create a list of edges, each of which is a list of two pages that are connected to each other by a link. Then, every time you discover a new link, save this info to a JSON file:

You can open this file up in graph viewer below to check your progress. As I mentioned before, edges, should be a list of lists of pairs of URLs that are connected by a link, in no particular order. Click here for an example of the edges on https://www.ctralie.com up to two clicks (note that self edges will happen since pages often refer to themselves on a navigation menu!).

Additionally, you should adhere to the following requirements as you're going along:

-

You should gracefully handle any errors without crashing. For example, if you can't load a particular URL, simply don't load and expand it. Have a look at python error handling to see how to do this using

try/catch - You should not visit a URL more than once. This is baked into the BFS algorithm, but be sure you're keeping proper track of the links you've visited somehow

-

We're sticking to HTML pages in this assignment, so you should only read the data from a URL if the

Content-Typein the header contains"text/html". This will prevent you from downloading things like videos or pdf files that will crash your code (not to mention wasting bandwidth) -

Sleeping

To be kind to the web site that you're crawling, particularly while you're debugging, be sure to sleep for at least one second between subsequent web page requests

NOTE: You might want to crawl https://www.ctralie.com/ as you're developing before you crawl ursinus.edu. It's a simpler web site, and I don't mind as much if you crawl me while you're debugging 😅

Graph Viewer

Below is a live applet that you can use to view graphs that result from crawling. I have four default graphs that you can play with, but you should be sure to test the graphs you're creating as you're going along by uploading your generated JSON files.

Choose File |

||

|

|

||

URLURL Will show here when you mouse over a node |

Force Directed Graph GUI Directions

- CTRL+Click to pan the view

- CTRL+Scroll to zoom in and out

- Left click and drag a node to move it around, and double click on a node to visit the corresponding page